When it comes to making decisions related to user experience and web design, A/B Testing can be extremely helpful. If implemented properly, even minor changes—such as modifying the color scheme—can help your website perform better.

In this article, we’ll be discussing A/B Testing in general, as well as taking a look at some of the mistakes or errors that you can avoid when conducting an A/B Test, thereby enhancing the usefulness of your results.

A/B Testing: Introduction

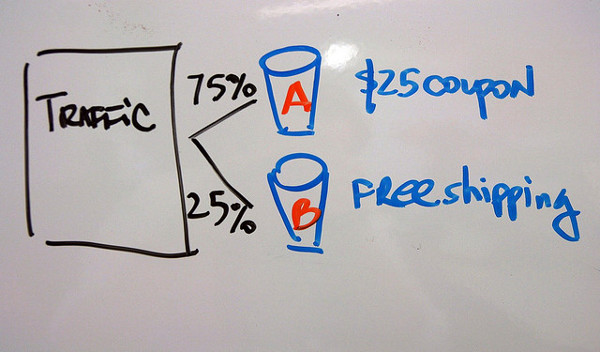

In simple terms, A/B Testing (also known as Split Testing) compares two versions of the same element over a given period of time to see which version performs better.

For instance, perhaps you are unsure about the location of the Call-to-Action button on your homepage—should it be on the right, or left? Now, in order to conduct an A/B Test and get statistical data, you split your website visitors, so half of them are presented with the Call-to-Action button on the right, and the other half are shown the same button on the left (all other things remaining constant). After some time, you may discover that users who were shown the button on the right clicked on it more than those who saw the button on the left—thereby hinting that a Call-to-Action button on the right is more likely to generate results. You can conduct similar experiments with other aspects of a site, like color schemes and even font styles.
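The split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the visitor IDs, the click data, and the names `assign_variants` and `click_rate` are hypothetical, and a random choice gives roughly, not exactly, half of the visitors to each side.

```python
import random

VARIANTS = ("left", "right")  # hypothetical labels for the two button placements


def assign_variants(visitor_ids, seed=None):
    """Randomly assign each visitor to one of the two variants."""
    rng = random.Random(seed)
    return {visitor_id: rng.choice(VARIANTS) for visitor_id in visitor_ids}


def click_rate(assignments, clicked_ids, variant):
    """Share of visitors shown `variant` who clicked the button."""
    shown = [vid for vid, v in assignments.items() if v == variant]
    if not shown:
        return 0.0
    return sum(1 for vid in shown if vid in clicked_ids) / len(shown)


if __name__ == "__main__":
    assignments = assign_variants(range(1000), seed=42)
    # Made-up click data, for illustration only.
    clicked = {vid for vid in range(1000) if vid % 17 == 0}
    for variant in VARIANTS:
        print(variant, round(click_rate(assignments, clicked, variant), 3))
```

Comparing the two click rates is the raw result of the test. Whether the difference means anything depends on how many visitors were involved.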

Beyond web design, A/B Testing is also used in numerous industries and markets to assess market growth and conversion rates of sales.

There are numerous tools that help you get the most out of A/B Testing. One of the most popular ones is Visual Website Optimizer, which lets you perform A/B Testing and other related tests on your website.

Plus, if you wish to make informed calculations related to A/B Testing, User Effect has a calculator to help you out. For WordPress users, Convert Experiments is a good plugin to begin with. Other options can be Optimizely (not updated for almost a year, however), and Max A/B (again, not updated for almost two years).

With the introduction out of the way, let us now discuss some of the common mistakes that we can avoid while conducting A/B Tests on our designs.

A/B Testing: Mistakes You Can Avoid

1. Insufficient Sample Size or Testing Period

Generally speaking, sample size is the number of website visitors included in the test. An insufficient sample size means that the number of visitors you ran the A/B Test on is too small relative to your website’s actual traffic. Say your site gets roughly 20,000 monthly visits: a sample of 20-30 visitors is too small to draw any reliable conclusions.

Furthermore, you also need to be wary of how long you run the A/B Test. Consider the Call-to-Action button once again. You decide to perform a test with the button placed on the right, and you run this A/B Test for eight hours. During these eight hours, you observe that the conversion rate of your Call-to-Action button has increased by 200%. Amazing, right? Test successful?

Probably not.

Again, owing to insufficient sample size or a rather small testing period, your test results may fail to be accurate. In the long run, once you do implement the changes as per the suggestions of the test results, you may notice that the conversion rate has gone down.
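To see why a 200% jump on a tiny sample proves little, here is a rough sketch using a two-proportion z-test. The visitor and conversion counts are invented for illustration, the function name is hypothetical, and the normal approximation used here is itself shaky at such small counts, so treat the output as indicative only.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # identical, degenerate rates: no evidence of a difference
    z = (p_b - p_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical numbers: 1 of 15 visitors converted on the old layout,
# 3 of 15 on the new one. On paper that is a 200% increase.
p_small = two_proportion_p_value(1, 15, 3, 15)

# The same conversion rates with 100 times as many visitors.
p_large = two_proportion_p_value(100, 1500, 300, 1500)

print(f"small sample p-value: {p_small:.3f}")
print(f"large sample p-value: {p_large:.3g}")
```

With the small sample the p-value stays well above the usual 0.05 threshold, so the apparent increase could easily be chance. The same rates over a much larger sample give a p-value far below it.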

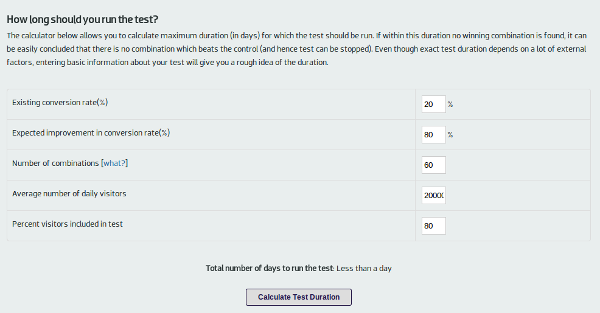

In such cases, it is best to properly calculate the estimated time period and sample size for your A/B Test. A handy tool for this purpose is the Test Duration Calculator, which tells you how long you should run your test. The goal is statistical confidence: having a large enough sample of visitors and a long enough time frame that your results reflect a real difference, not chance.
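For readers who prefer to estimate this themselves, the following sketch uses a standard textbook approximation for comparing two conversion rates. It is not the method used by any particular calculator. The function names, the 5% significance level, the 80% power, and the example rates are all assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect the given change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def days_needed(n_per_variant, daily_visitors, variants=2):
    """Days required to collect the sample, given average daily traffic."""
    return ceil(n_per_variant * variants / daily_visitors)


if __name__ == "__main__":
    # Hypothetical goal: detect a rise from a 5% to a 6% conversion rate
    # on a site with about 20,000 monthly visits (roughly 667 per day).
    n = sample_size_per_variant(0.05, 0.06)
    print("visitors per variant:", n)
    print("days to run the test:", days_needed(n, daily_visitors=667))
```

The smaller the change you want to detect, the more visitors you need, which is why subtle design tweaks take longer to test than dramatic ones.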

2. Confusing A/B Testing With Multivariate Testing

A/B Testing is best used when you have to test two versions of one variable, such as the placement or color of our Call-to-Action button. If you wish to find out whether the blue Call-to-Action button gets a better response than the green one, consider an A/B Test.

However, an A/B Test is often employed even when more than one variable is involved. In such cases, you should consider conducting a Multivariate Test (henceforth, MVT).

For example, in the case of our Call-to-Action button, consider these four cases:

- Placement only: Right or left

- Color only: Blue or green

- Placement, color and size

- Color and size

The last two cases call for an MVT, not an A/B Test. During an MVT, you may find out that the button performs better when it is placed on the right side of the page, only if it is green and is bigger than the older button. However, an MVT is useful only for websites that have a good amount of traffic because you need to divide your visitors among the multiple versions that are being tested.
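The traffic requirement becomes clearer once you count the versions involved. The sketch below enumerates every combination for the placement, color and size case. The two size values and the helper name `build_combinations` are assumptions, and the 20,000 monthly visits figure is reused from the earlier example.

```python
from itertools import product

# Each variable under test and its possible values.
factors = {
    "placement": ["left", "right"],
    "color": ["blue", "green"],
    "size": ["regular", "large"],  # hypothetical size options
}


def build_combinations(factors):
    """Return every combination of factor values as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, values)) for values in product(*factors.values())]


combinations = build_combinations(factors)
monthly_visitors = 20_000
visitors_per_combination = monthly_visitors // len(combinations)

for combo in combinations:
    print(combo)
print("versions to test:", len(combinations))
print("visitors per version per month:", visitors_per_combination)
```

Every extra variable multiplies the number of versions, so the share of visitors that each version receives shrinks quickly.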

3. Unsegmented Sample Size

Carrying on with our example of the Call-to-Action button and its conversion rate, how do you decide what constitutes the sample size? Should you just pick randomly out of all website visitors?

Maybe, but what if your site has visitors who are already loyal customers, and you are conducting the test to attract newer users? In this case, a better solution will be to choose from only new visitors. Thus, by conducting the test on only the new visitors of your website, you can make sure that your results are not diluted due to the clicks of returning visitors who are already your customers (and have gone through the “conversion” process). When you are testing conversion rates, segmenting the sample and targeting just the new visitors is a wonderful idea.
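As a small sketch of this kind of segmentation, the snippet below compares the conversion rate across all visitors with the rate for new visitors only. The record layout, the `existing_customer` flag, and the sample data are made up for illustration; how you actually identify new visitors depends on your analytics setup.

```python
def conversion_rate(visitors):
    """Fraction of the given visitors who converted."""
    if not visitors:
        return 0.0
    return sum(1 for v in visitors if v["converted"]) / len(visitors)


def new_visitors_only(visitors):
    """Keep only visitors who are not already customers."""
    return [v for v in visitors if not v["existing_customer"]]


# Made-up log entries, for illustration only.
visitors = [
    {"id": 1, "existing_customer": True, "converted": False},
    {"id": 2, "existing_customer": True, "converted": False},
    {"id": 3, "existing_customer": False, "converted": True},
    {"id": 4, "existing_customer": False, "converted": False},
]

print("all visitors:", conversion_rate(visitors))
print("new visitors:", conversion_rate(new_visitors_only(visitors)))
```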

Furthermore, you should also consider being consistent with your A/B Tests. If you have shown the blue Call-to-Action button to one visitor, make sure that he/she sees only the blue one for the entire duration of the test.
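One common way to keep the experience consistent is to derive the variant from a stable visitor identifier instead of choosing at random on every page load. The sketch below assumes such an identifier is available (for example, from a cookie). The function name `sticky_variant` and the experiment label are hypothetical.

```python
import hashlib


def sticky_variant(visitor_id, experiment="cta-color", variants=("blue", "green")):
    """Map a visitor to the same variant every time, based on a hash of their ID."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the hash into an index into the list of variants.
    return variants[int(digest, 16) % len(variants)]


if __name__ == "__main__":
    for visitor_id in ("visitor-1", "visitor-2", "visitor-3"):
        # Calling the function twice returns the same color for the same visitor.
        print(visitor_id, sticky_variant(visitor_id), sticky_variant(visitor_id))
```

Including the experiment label in the hash means a visitor who lands in one group for this test is not automatically placed in the matching group for every other test.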

Are you planning to conduct an A/B Test on your website? Share your thoughts in the comments below!

Image Credits: Mark Levin | Maricel | Ben Terrett

Sufyan bin Uzayr is a freelance writer and Linux enthusiast. He writes for several print magazines as well as technology blogs, and has also authored a book named Sufism: A Brief History. His primary areas of interest include open source, mobile development and web CMS. He is also the Editor of an e-journal named Brave New World. You can visit his website, follow him on Twitter or friend him on Facebook and Google+.
